Why the hell would we need AI summaries of a wikipedia article? The top of the article is explicitly the summary of the rest of the article.

Even beyond that, the "complex" language they claim is confusing is the whole point of Wikipedia. Neutral, precise language that describes matters accurately for laymen. There are links to every unusual or complex related subject and even individual words in all the articles.

I find it disturbing that a major share of the userbase is supposedly unable to process the information provided in this format, and needs it dumbed down even further. Wikipedia is already the summarized and simplified version of many topics.

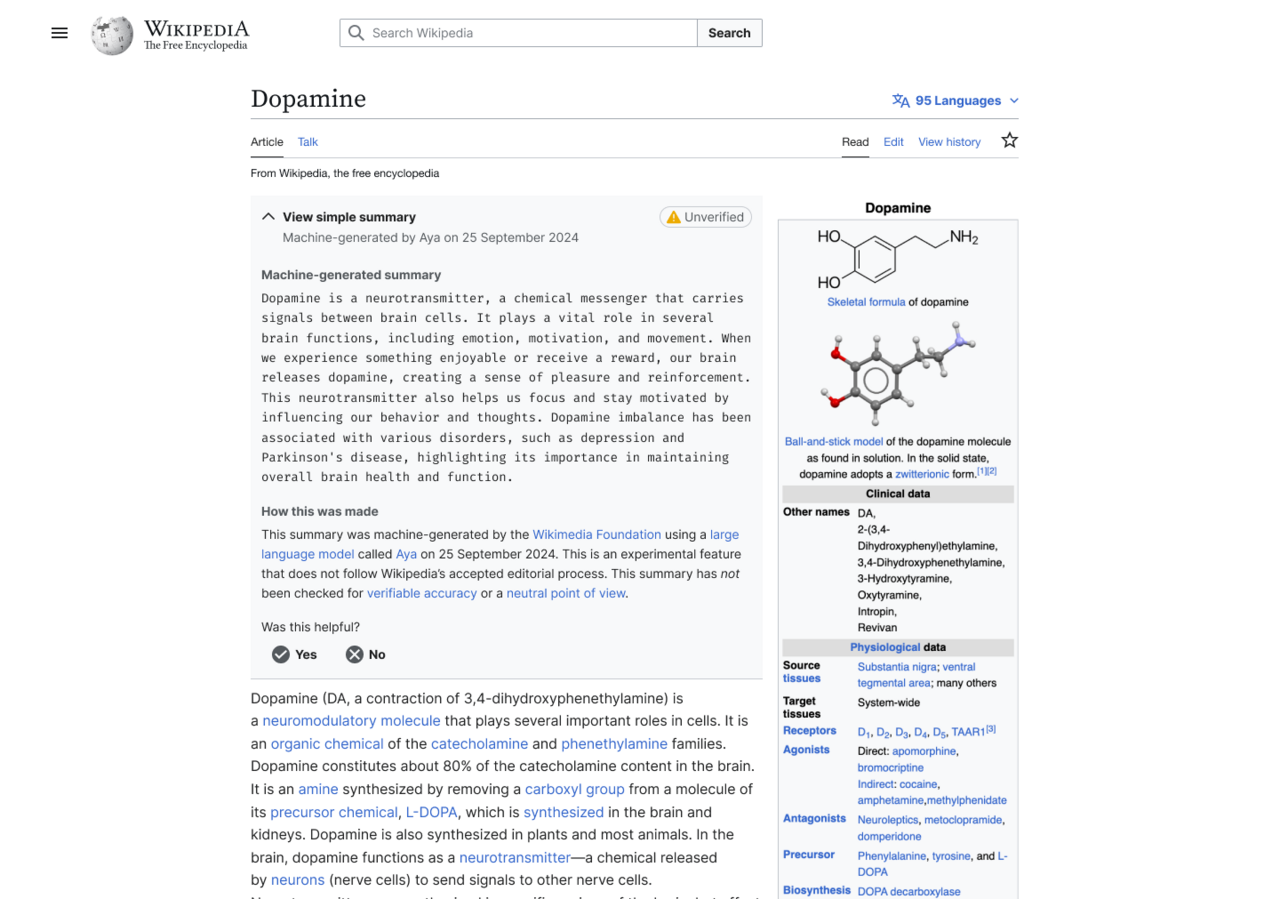

A page detailing the AI-generated summaries project, called “Simple Article Summaries,” explains that it was proposed after a discussion at Wikimedia’s 2024 conference, Wikimania, where “Wikimedians discussed ways that AI/machine-generated remixing of the already created content can be used to make Wikipedia more accessible and easier to learn from.” Editors who participated in the discussion thought that these summaries could improve the learning experience on Wikipedia, where some article summaries can be quite dense and filled with technical jargon, but that AI features needed to be clearly labeled as such and that users needed an easy way to flag issues with “machine-generated/remixed content once it was published or generated automatically.”

The intent was to make more uniform summaries, since some of them can still be inscrutable.

Relying on a tool notorious for making significant errors isn't the right way to do it, but it's a real issue being examined.

In thermochemistry, an exothermic reaction is a "reaction for which the overall standard enthalpy change ΔH⚬ is negative."[1][2] Exothermic reactions usually release heat. The term is often confused with exergonic reaction, which IUPAC defines as "... a reaction for which the overall standard Gibbs energy change ΔG⚬ is negative."[2] A strongly exothermic reaction will usually also be exergonic because ΔH⚬ makes a major contribution to ΔG⚬. Most of the spectacular chemical reactions that are demonstrated in classrooms are exothermic and exergonic. The opposite is an endothermic reaction, which usually takes up heat and is driven by an entropy increase in the system.

This is a perfectly accurate summary, but it's not entirely clear and has room for improvement.

I'm guessing they were adding new summaries so that they could clearly label them and not remove the existing ones, not out of a desire to add even more summaries.

Wikimedians discussed ways that AI/machine-generated remixing of the already created content can be used to make Wikipedia more accessible and easier to learn from

The entire mistake right there. Look no further. They saw a solution (LLMs) and started hunting for a problem.

Had they done it the right way round there might have been some useful, though less flashy, outcome. I agree many article summaries are badly written. So why not experiment with an AI that flags those articles for review? Or even just organize a community drive to clean up article summaries?

The questions are rhetorical of course. Like every GenAI peddler they don't have an interest in the problem they purport to solve, they just want to play with or sell you this shiny toy that pretends really convincingly that it is clever.

some article summaries can be quite dense and filled with technical jargon, but that AI features needed to be clearly labeled as such and that users needed an easy way to flag issues with "machine-generated/remixed content once it was published or generated automatically."

If they feel that this is an issue, they could generate the summary on the talk page and have the editors refine and approve it before publishing. Alternatively, set an expectation that article summaries are written in plain English.

And what about simple wikipedia?

I know one study found that 51% of the AI-produced summaries it looked at contained significant errors. So AI summaries are bad news for anyone who hopes to be well informed. Source: https://www.bbc.com/news/articles/c0m17d8827ko

I'm so tired of "AI". I'm tired of people who don't understand it expecting it to be magical and error free. I'm tired of grifters trying to sell it like snake oil. I'm tired of capitalist assholes drooling over the idea of firing all that pesky labor and replacing them with machines. (You can be twice as productive with AI! But you will neither get paid twice as much nor work half as many hours. I'll keep all the gains.) I'm tired of the industrial-scale theft that apologists want to give a pass to while individuals who torrent can still get in trouble, and libraries are chronically underfunded.

It's just all bad, and I'm so tired of feeling like so many people are just not getting it.

I hope Wikipedia never adopts this stupid AI Summary project.

People not getting things that seem obvious is an ongoing theme, it seems. We sat through a presentation at work by some guy who enthusiastically pitched AI to the masses. I don't mean that's what he did, I mean "enthusiasm" seemed to be his ONLY qualification. Aside from telling folks what buttons to press on the company's AI app, he didn't know SHIT. And the VP got on before and after and it was apparent that he didn't know shit, either. Someone is whispering in these people's ears and they're writing fat checks, no doubt, and they haven't a clue what an LLM is, what it is good at, nor what to be wary of. Absolutely ridiculous.

"Pause" and not "Stop" is concerning.

Is it just me, or was the addition of AI summaries basically predetermined? The AI panel probably would only be attended by a small portion of editors (introducing selection bias) and it's unclear how much of the panel was dedicated to simply promoting the concept.

I imagine the backlash comes from a much wider selection of editors.

Wikimedia has too much money, maybe this has started to create rotten tumors inside it.

Good, we don't need LLMs crowbarred into everything. You don't need a summary of an encyclopedia article; it is already a broad overview of a complex topic.

when wikipedia starts to publish ai generated content it will no longer be serving its purpose and it won't need to exist anymore

Why would anyone need Wikipedia to offer the AI summaries? Literally all chat bots with access to the internet will summarize Wikipedia when it comes to knowledge based questions. Let the creators of these bots serve AI slop to the masses.

there's a summary paragraph at the top of each article which is written by people who have assholes probably. it's the whole reason to use wikipedia at this point

This was my very first thought as well. The first section of almost every Wikipedia article is already a summary.

If I wanted an AI summary, I'd put the article into my favourite LLM and ask for one.

I'm sure LLMs can take links sometimes.

And if Wikipedia wanted to include it directly into the site...make it a button, not an insertion.

The main issue I have as an editor is that there is no straightforward way to retrain the LLM to correct faulty training as directly or revertably as the existing method of editing an article's wikicode. Already, much of my time updating Wikipedia is spent parsing puffery and removing phrases like “award-winning” or “renowned”, inserted by malicious advertisers trying to use Wikipedia as a free billboard. If a Wikipedia LLM began making subjective claims instead of providing objective facts backed by citations, I would have to teach myself machine learning and get involved with the developers who manage the LLM's training. That raises the bar for editor technical competency which Wikipedia historically has been striving to lower (e.g. Visual Editor).

Summaries for complex Wikipedia articles would be great, especially for people less knowledgeable of the given topic, but I don't see why those would have to be AI-generated.

the Top section of each wikipedia article is already a summary of the article

Fucking thank you. Yes, experienced editor to add to this: that's called the lead, and that's exactly what it exists to do. Readers are not even close to starved for summaries:

- Every single article has one of these. It is at the very beginning – at most around 600 words for very extensive, multifaceted subjects. 250 to 400 words is generally considered an excellent window to target for a well-fleshed-out article.

- Even then, the first sentence itself is almost always a definition of the subject, making it a summary unto itself.

- And even then, the first paragraph is also its own form of summary in a multi-paragraph lead.

- And even then, the infobox to the right of 99% of articles gives easily digestible data about the subject in case you only care about raw, important facts (e.g. when a politician was in office, what a country's flag is, what systems a game was released for, etc.)

- And even then, if you just want a specific subtopic, there's a table of contents, and we generally try as much as possible (without harming the "linear" reading experience) to make it so that you can intuitively jump straight from the lead to a main section (level 2 header).

- Even then, if you don't want to click on an article and just instead hover over its wikilink, we provide a summary of fewer than 40 characters so that readers get a broad idea without having to click (e.g. Shoeless Joe Jackson's is "American baseball player (1887–1951)").

What's outrageous here isn't wanting summaries; it's that summaries already exist in so many ways, written by the human writers who write the contents of the articles. Not only that, but as a free, editable encyclopedia, these summaries can be changed at any time if editors feel like they no longer do their job somehow.

This not only bypasses the hard work real, human editors put in for free in favor of some generic slop that's impossible to QA, but it also bypasses the spirit of Wikipedia that if you see something wrong, you should be able to fix it.

Yeah this screams "Let's use AI for the sake of using AI". If they wanted simpler summaries on complex topics they could just start an initiative to have them added by editors instead of using a wasteful, inaccurate hype machine

Why is it so damned hard for corporate to understand that most people have no use or need for AI at all?

"It is difficult to get a man to understand something, when his salary depends on his not understanding it."

— Upton Sinclair

Wikipedia management shouldn't be under that pressure. There's no profit motive to enshittify or replace human contributions. They're funded by donations from users, so their top priority should be giving users what they want, not attracting bubble-chasing venture capital.

I get that the simple language option exists, and I definitely think I'm not qualified to really argue what Wikipedia should or should not do. But I wanted to share what my Lemmy feed looked like when I clicked into this post and I gotta say, I sorta get it.

Good! I was considering stopping my monthly donation. They better kill the entire "machine-generated" nonsense instead of just pausing, or I will stop my pledge!

If they have enough money to burn on LLM results, they clearly have enough and I don't need to keep donating mine.

Good! I was considering stopping my monthly donation.

Ditto. I don't want to overreact, but it's not a good look.

I mean, the LLM thing has a proper field for deployment: it can handle the translation of articles that just don't exist in your language. But it should be a button a person clicks with their consent, not an article they get by default, and not content presented under Wikipedia's own name. Nowadays, that's done by browsers themselves and their extensions.

Who could have known, in this day and age, that this would be met with backlash? Truly an unprecedented occurrence.

I bet they will try again.

Wouldn't be surprised, since "no" as a full sentence does not exist in tech or online anymore - it's always "yes" or "maybe later/not now/remind me next time" or other crap like that...

Didn't they just pass a site-wide decision on the use of LLMs in creating/editing otherwise "human made" text?

Why do they need to take the human element out? Why would anyone want them to?

God I hope this isn't the beginning of the end for Wikipedia. They live and die on the efforts of volunteer editors (like Reddit relied on volunteer mods and third party tool devs). The fastest way to tank themselves is by driving off their volunteers with shit like this.

And it's absurdly easier to lose the good will they have than to rebuild it.

Wait until you learn about their budget. When they do donation drives with a sad Jimmy Wales face, they make it seem like Wikipedia is about to go offline. But if they were only hosting Wikipedia, they already have enough money to do that pretty much until the end of time. Instead, the foundation spends a ton of money on the pet causes of the board members. The causes aren’t necessarily bad or anything, but misleading donors like that is super messed up.

On the one hand, it’s insulting to expect people to write entries for free only to have AI just summarize the text and have users never actually read those written words.

On the other hand, the future is people copying the URL into ChatGPT and asking for a summary.

The future is bleak either way.

On the third hand, some of us just want to be able to read a fucking article with information instead of a TikTok or AI-generated garbage. That's Wikipedia, or at least it used to be before this garbage. Hopefully it stays true.

These summaries are useless anyways because the AI hallucinates like crazy... Even the newest models constantly make up bullshit.

It can't be relied on for anything, and it's double work reading the words it shits out and then you still gotta double check it's not made up crap.

Yes, throw out the one thing that differentiates you from the unreliable slop.

Aaaaarrgg! This is horrible they stopped AI summaries, which I was hoping would help corrupt a leading institution protecting free thought and transfer of knowledge.

Sincerely, the Devil, Satan