Not immediate failure—that’s the trap. Initial metrics look great. You ship faster. You feel productive.

And all they'll hear is "not failure, metrics great, ship faster, productive", and then they'll go against your advice, because who cares about three months later? That's next quarter; the line must go up now. I also found this bit funny:

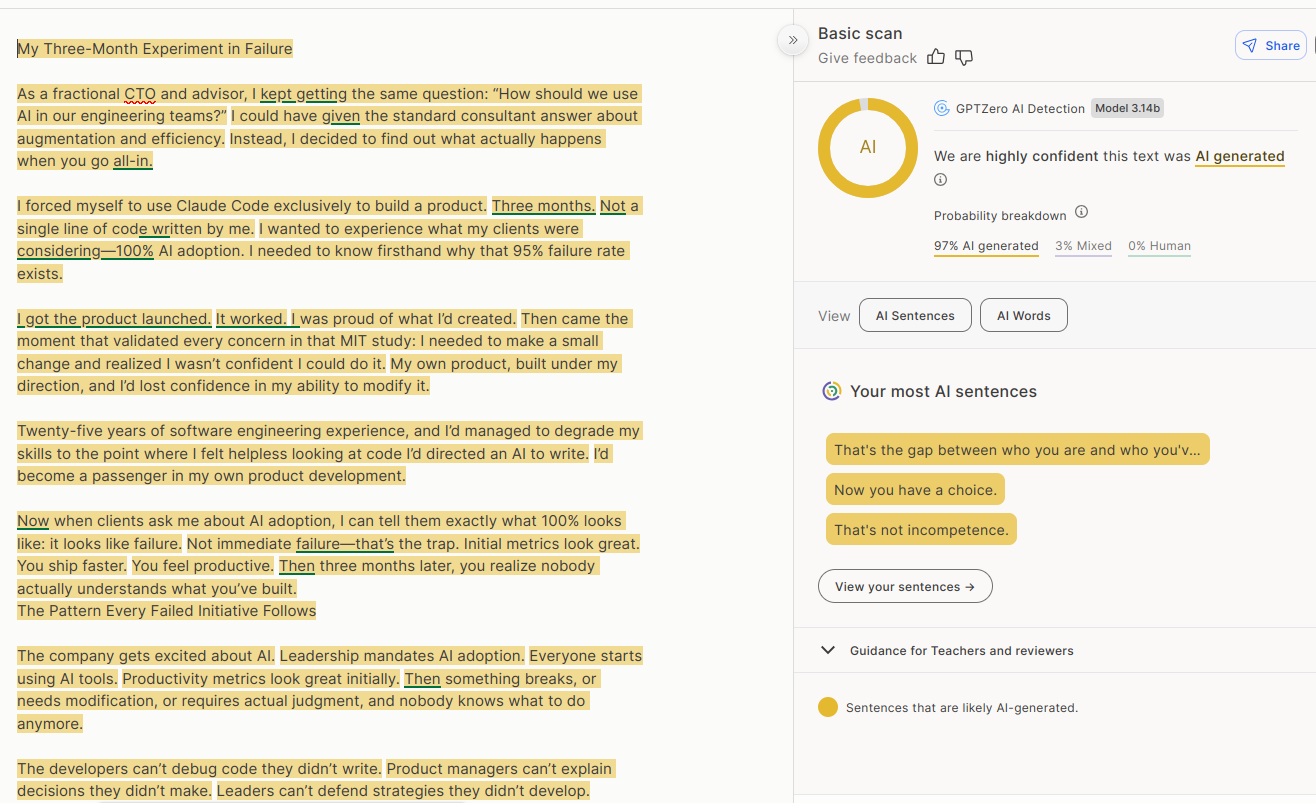

I forced myself to use Claude Code exclusively to build a product. Three months. Not a single line of code written by me... I was proud of what I’d created.

Well, you didn't create it; you said so yourself. Not sure why you'd be proud. It's almost like the conclusion should've been blindingly obvious right there.