this post was submitted on 17 Nov 2025




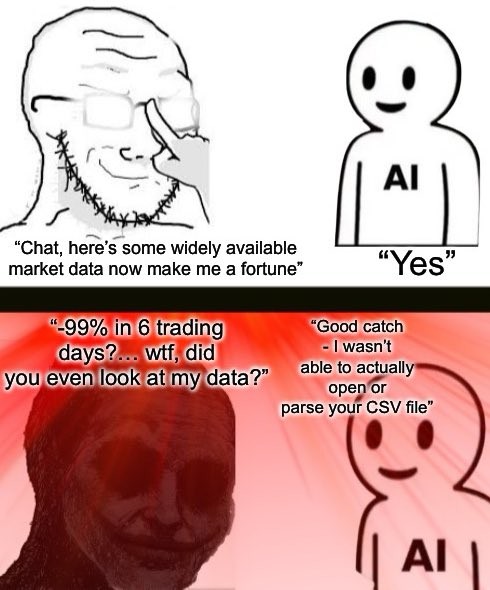

What's most annoying to me about the fiasco is that things people used to be okay with, like ML, which has always been lumped in under the term AI, are now getting hate because they're "AI".

What's worse is that management conflates the two all the time. Whenever I give them the outputs of my own ML algorithm, they think it's LLM output. Then they ask me to just ask ChatGPT to do any damn thing that I would usually do myself or feed into my ML model to predict.

? If you make and work with ML, you are in a field of research. It's not a technology that you "use". And if you give the output of your "ML", then that is identical to an LLM output. They don't conflate anything. ChatGPT is also the output of "ML".

When I say the output of my ML, I mean I give the prediction and a confidence score. For instance, if a process has a high probability of being late based on the inputs, I'll say it'll be late, along with the confidence. That's completely different from feeding the figures into a GPT and repeating whatever the LLM says.

And when I say "ML", I mean a model I trained on specific data to do a very specific thing. There's no prompting and no chat-like output. It's not a language model.
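For anyone wondering what "a prediction and a confidence score" can look like in practice, here is a rough sketch in plain Python. It is not the commenter's actual model: the two features (`queue_load`, `staff_short`), the synthetic training data, and the tiny hand-rolled logistic regression are all made up for illustration. The point is only that the output is a label plus a probability, with no prompt and no generated text.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(rows, labels, lr=0.5, epochs=1000):
    """Fit logistic regression weights with batch gradient descent."""
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            # Prediction error for this row (predicted probability minus label).
            err = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for j, xi in enumerate(x):
                grad_w[j] += err * xi
            grad_b += err
        weights = [w - lr * g / len(rows) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(rows)
    return weights, bias


def predict_late(weights, bias, x):
    """Return (is_late, confidence in that call) for one process."""
    p_late = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
    is_late = p_late >= 0.5
    confidence = p_late if is_late else 1.0 - p_late
    return is_late, confidence


# Hypothetical synthetic history: (queue_load in 0..1, staff_short as 0 or 1).
rng = random.Random(0)
rows, labels = [], []
for _ in range(200):
    queue_load = rng.random()
    staff_short = rng.choice([0.0, 1.0])
    noisy_score = queue_load + 0.5 * staff_short + rng.gauss(0.0, 0.1)
    rows.append((queue_load, staff_short))
    labels.append(1 if noisy_score > 0.8 else 0)

weights, bias = train_logistic(rows, labels)

is_late, confidence = predict_late(weights, bias, (0.95, 1.0))
print(f"late={is_late}, confidence={confidence:.2f}")
```

A real setup would more likely use a library model and held-out data to check that the confidence is calibrated, but the shape of the output is the same: a yes/no call and a number, not a paragraph of text.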