this post was submitted on 13 Nov 2025

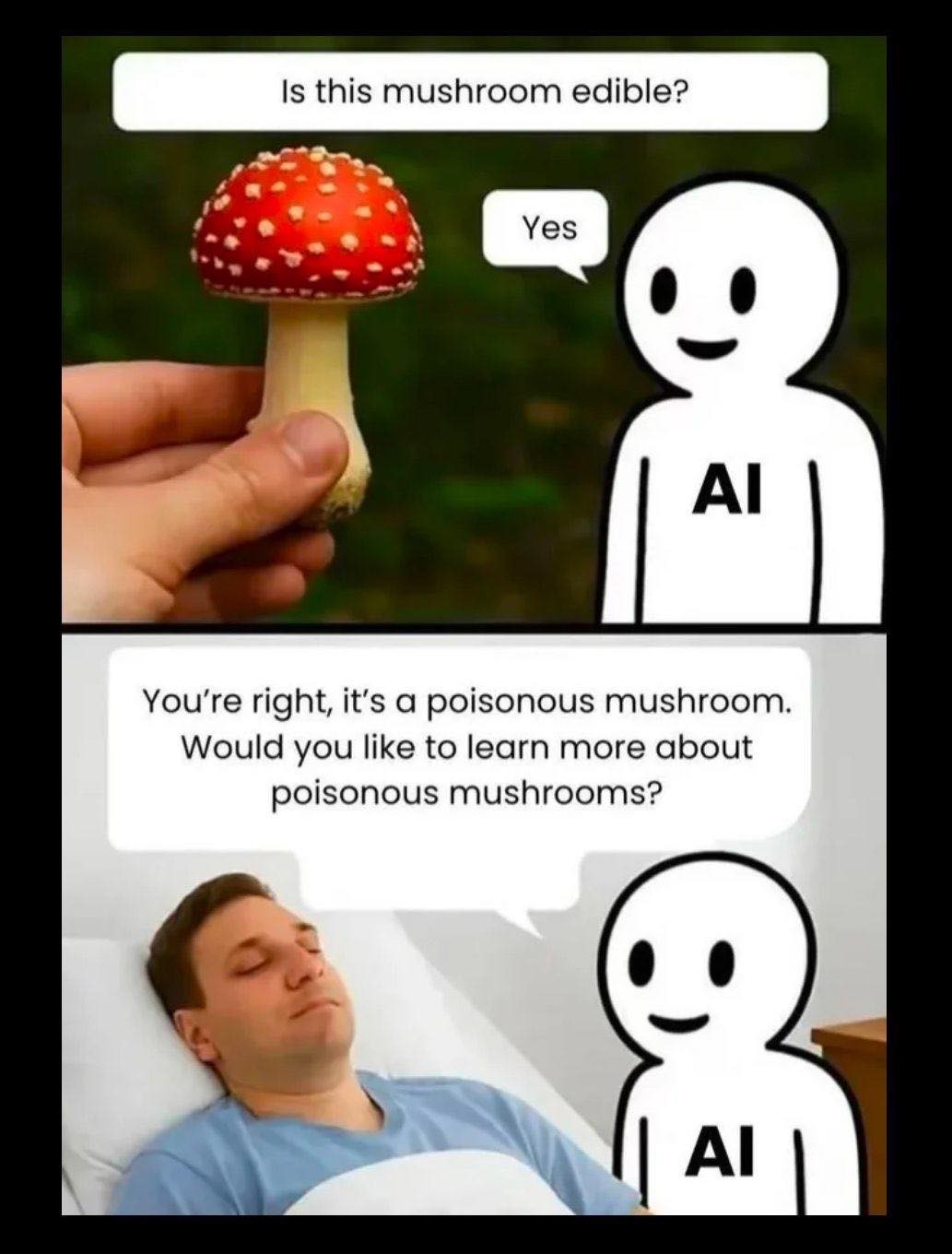

The single most important thing (IMHO), which isn't really widely talked about, is that the error distribution of LLMs in terms of severity is uniform: in other words, LLMs are equally likely to make a minor mistake of little consequence as they are to make a deadly mistake.

This is not so with humans: even the most ill-informed person avoids some mistakes because they're obviously wrong (say, using glue as a pizza ingredient, or telling people voicing suicidal thoughts to "kill yourself"). Beyond that, people pay a lot more attention to avoiding mistakes in important things than in smaller things, so the distribution of human mistakes in terms of consequence is not uniform.

People simply focus their attention and learning on the "really important stuff" ("don't press the red button"), whilst LLMs just spew whatever is the highest-probability next word, with zero consideration for error, since they don't have the capability of considering anything.

This by itself means that LLMs are only suitable for things where a high probability of outputting the worst of mistakes is not a problem, for example when the LLM's output is reviewed by a domain specialist before being used, or is simply mindless entertainment.
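To put some toy numbers on the uniform vs. non-uniform point, here's a rough sketch. Everything in it is made up for illustration: the severity buckets, the cost weights and both probability tables are assumptions, not measurements of any real LLM or of real people. It only shows that if you hold the number of mistakes fixed, how those mistakes are spread across severities changes the expected damage per mistake a lot.

```python
# Illustrative only: the severity buckets, costs and probabilities below
# are invented assumptions, not data about any real model or person.
SEVERITY_COST = {"minor": 1, "moderate": 10, "severe": 100, "deadly": 1000}


def expected_cost(severity_probs):
    """Expected cost of ONE mistake, given P(severity | a mistake happened)."""
    total = sum(severity_probs.values())
    if abs(total - 1.0) > 1e-9:
        raise ValueError("severity probabilities must sum to 1")
    return sum(SEVERITY_COST[s] * p for s, p in severity_probs.items())


# Hypothetical "uniform" profile: every severity is equally likely.
uniform = {s: 0.25 for s in SEVERITY_COST}

# Hypothetical "skewed" profile: mistakes cluster in the harmless bucket
# because more care goes into the things that matter.
skewed = {"minor": 0.90, "moderate": 0.09, "severe": 0.009, "deadly": 0.001}

uniform_cost = expected_cost(uniform)
skewed_cost = expected_cost(skewed)

print(f"uniform: {uniform_cost:.2f} per mistake")
print(f"skewed:  {skewed_cost:.2f} per mistake")
```

With these invented weights the uniform profile comes out at roughly 278 per mistake against roughly 3.7 for the skewed one, so the same mistake count is far more costly when severity is spread evenly. Different weights would give different ratios; only the direction of the gap matters here.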