this post was submitted on 13 Nov 2025

735 points (98.3% liked)

memes

18026 readers

1095 users here now

Community rules

1. Be civil

No trolling, bigotry or other insulting / annoying behaviour

2. No politics

This is a non-politics community. For political memes, please go to !politicalmemes@lemmy.world

3. No recent reposts

Check for reposts when posting a meme; you can only repost after 1 month.

4. No bots

No bots without the express approval of the mods or the admins

5. No Spam/Ads/AI Slop

No advertisements or spam. This is an instance rule and the only way to live. We also consider AI slop to be spam in this community, and it is subject to removal.

A collection of some classic Lemmy memes for your enjoyment

Sister communities

- !tenforward@lemmy.world : Star Trek memes, chat and shitposts

- !lemmyshitpost@lemmy.world : Lemmy Shitposts, anything and everything goes.

- !linuxmemes@lemmy.world : Linux themed memes

- !comicstrips@lemmy.world : for those who love comic stories.

founded 2 years ago

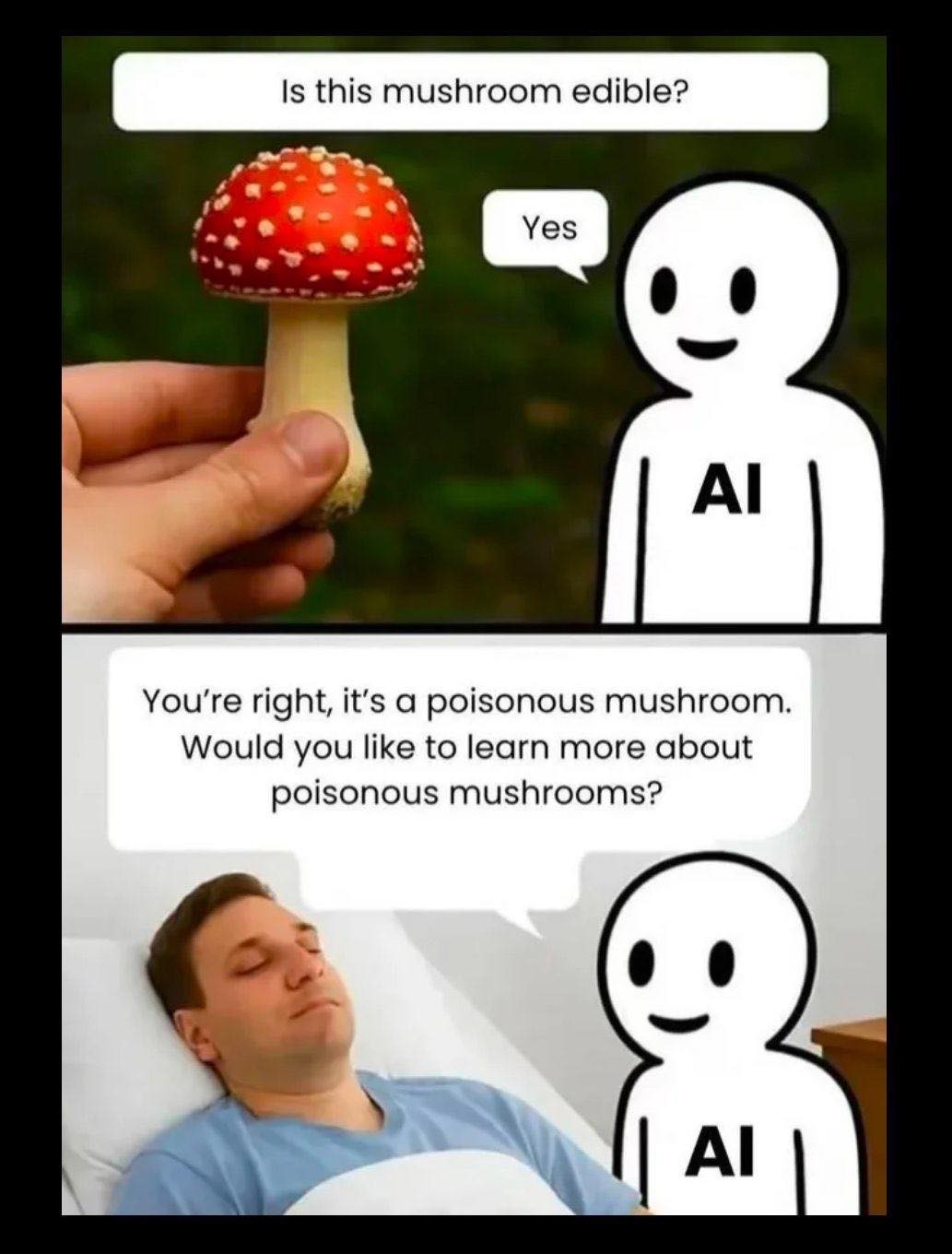

LLMs are an incredible interface to other systems. Why learn a new system language to get information when you can ask in natural language, and the AI translates your question into the system language, does the lookup for you, and then translates the result back into natural language? The important part is that the AI never answers your question itself; it just translates between human language and system language.

Use it as a language machine and never as a knowledge machine!
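A minimal Python sketch of the "language machine" pattern described above. The LLM is replaced by a hypothetical hard-coded lookup (`translate_to_sql`) so the example runs without any model; the table, column names, and sample row (borrowed from this community's reader count) are assumptions for illustration. What it shows: the answer comes out of the database lookup, while the translation steps only map a question to a query and rows back to a sentence.

```python
import sqlite3

# Hypothetical stand-in for the LLM's "natural language -> system language" step.
# A real setup would ask a model to write the query; here it is a fixed lookup
# so the sketch runs on its own.
CANNED_QUERIES = {
    "how many readers does memes have?": (
        "SELECT readers FROM communities WHERE name = ?",
        ("memes",),
    ),
}


def translate_to_sql(question):
    """Map a question to (sql, params), or None if it can't be translated."""
    return CANNED_QUERIES.get(question.strip().lower())


def render_answer(rows):
    """Hypothetical "system language -> natural language" step."""
    if not rows:
        return "The database has no matching record."
    return f"The database says: {rows[0][0]}"


def ask(conn, question):
    translated = translate_to_sql(question)
    if translated is None:
        # The translator refuses instead of inventing an answer itself.
        return "Sorry, I can't turn that into a query."
    sql, params = translated
    rows = conn.execute(sql, params).fetchall()  # the facts come from here
    return render_answer(rows)


# Sample data, assumed for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE communities (name TEXT, readers INTEGER)")
conn.execute("INSERT INTO communities VALUES (?, ?)", ("memes", 18026))

print(ask(conn, "How many readers does memes have?"))  # The database says: 18026
print(ask(conn, "What is the meaning of life?"))  # Sorry, I can't turn that into a query.
```

The sketch only holds up if the generated query is itself correct; with a real model in place of `translate_to_sql`, that step can still go wrong.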

AI's tendency to hallucinate means that for it to be actually reliable, a human needs to double-check all of its output. If it is being used to acquire and convey information of any kind to the prompter, you might as well just skip the AI and find the information manually, as you'd have to do that anyway to validate what it told you.

And AI hallucinations are a side effect of the fundamental way in which generative AI works; they will never be 100% accounted for. When an AI generates text, it is simply predicting which word is likely to come next, based on its prompt in relation to its training data. While this predictive ability has become remarkably sophisticated within the last few years (more than I thought it ever would, tbh), it is still only a predictive text generator. It's not "translating," "understanding," or "comprehending" anything about whatever subject it has been asked about; it is merely predicting the likelihood of the next word in its response based on its training data.
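To make the "predictive text generator" point concrete, here is a drastically simplified Python sketch: a bigram model that counts which word follows which in a tiny made-up training text and turns those counts into next-word probabilities. Real LLMs use neural networks over subword tokens and much longer contexts, so treat this as an analogy, not as how they are built; the function names and training sentence are assumptions for illustration. It shows the model rating a sentence it never saw ("the cat sat on the rug") exactly as likely as one it did see, because it only tracks word sequences, not what is true.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, how often each other word follows it."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, word):
    """Turn follower counts into probabilities for the next word."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}


def predict_next(counts, word):
    """Greedy prediction: the single most likely next word, or None."""
    probs = next_word_probs(counts, word)
    return max(probs, key=probs.get) if probs else None


def sequence_prob(counts, sentence):
    """Probability of a whole sentence as a product of next-word probabilities."""
    words = sentence.lower().split()
    p = 1.0
    for prev, nxt in zip(words, words[1:]):
        p *= next_word_probs(counts, prev).get(nxt, 0.0)
    return p


# Tiny made-up "training data".
model = train_bigrams("the cat sat on the mat the dog sat on the rug")

print(next_word_probs(model, "the"))  # {'cat': 0.25, 'mat': 0.25, 'dog': 0.25, 'rug': 0.25}
print(predict_next(model, "sat"))  # on
print(sequence_prob(model, "the cat sat on the mat"))  # 0.0625 (seen in training)
print(sequence_prob(model, "the cat sat on the rug"))  # 0.0625 (never seen)
```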