this post was submitted on 09 Jul 2025


Greentext


This is a place to share greentexts and witness the confounding life of Anon. If you're new to the Greentext community, think of it as a sort of zoo with Anon as the main attraction.

Be warned:

- Anon is often crazy.

- Anon is often depressed.

- Anon frequently shares thoughts that are immature, offensive, or incomprehensible.

If you find yourself getting angry (or god forbid, agreeing) with something Anon has said, you might be doing it wrong.



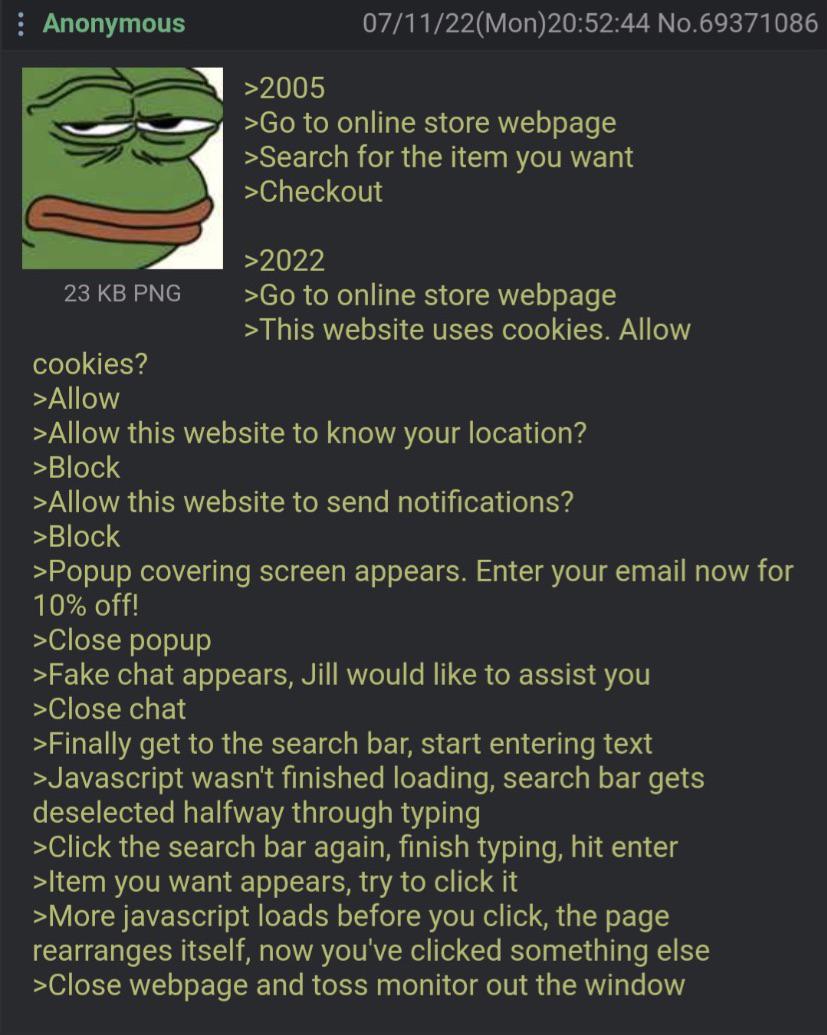

And everything is SO FUCKING SLOW. I swear my old Celeron 300A at 500 MHz, running Windows 98 and SUSE Linux, was super responsive. Everything you clicked responded right away, and everything felt smooth and snappy. Chatting with people over the internet using ICQ or MSN was basically instant: windows opened instantly, typing had zero latency, and messages sent instantly.

My current Ryzen 5950X is not only a billion times faster, it also has 16 times as many cores. I have hundreds of times more RAM than that old system had HDD capacity. Yet everything is slower: typing has latency, and starting up Teams takes 5 minutes. Doing anything is slow. Everything has latency, and you have to wait for things to finish loading and rendering, because if you don't, everything messes up and you end up waiting even longer.

In the 90s, a lot of programmers spent a lot of time carefully optimizing everything, on the theory that every CPU cycle counted. In the decades since, it's gotten easier than ever to write software, but the craft of writing great software has stalled compared to the ease of writing mediocre software. "Why shouldn't we block on a call to a remote service? Computers are so fast these days."
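The "just block on the remote call" excuse is easy to see in a small example. This is a minimal Rust sketch, not any real app's code: `fetch_remote_status` is a hypothetical stand-in that fakes a 200 ms network call with `thread::sleep`, and a real GUI would use its toolkit's event loop instead of these free functions. The blocking handler freezes the caller for the whole call, while the non-blocking one hands the work to a worker thread and returns right away.

```rust
use std::sync::mpsc;
use std::thread;
use std::time::{Duration, Instant};

// Hypothetical slow remote service; the 200 ms delay is an assumption for illustration.
fn fetch_remote_status() -> String {
    thread::sleep(Duration::from_millis(200));
    String::from("online")
}

// Anti-pattern: the "UI" handler waits on the network before doing anything else.
fn blocking_handler() -> (String, Duration) {
    let start = Instant::now();
    let status = fetch_remote_status(); // the caller is frozen for the entire call
    (status, start.elapsed())
}

// Alternative: run the call on a worker thread and return to the event loop immediately.
fn non_blocking_handler() -> (mpsc::Receiver<String>, Duration) {
    let start = Instant::now();
    let (tx, rx) = mpsc::channel();
    thread::spawn(move || {
        // send only fails if the receiver was dropped; ignoring that is fine for a sketch.
        let _ = tx.send(fetch_remote_status());
    });
    (rx, start.elapsed()) // returns long before the 200 ms call completes
}

fn main() {
    let (status, waited) = blocking_handler();
    println!("blocking: got {status} after {waited:?}");

    let (rx, waited) = non_blocking_handler();
    println!("non-blocking: handler returned after {waited:?}");
    // A real event loop would poll rx (e.g. with try_recv) between frames; here we just wait.
    let status = rx.recv().expect("worker thread hung up");
    println!("non-blocking: result arrived later: {status}");
}
```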

The flip side of that is that entire classes of bugs have been removed from modern software.

The difference is primarily the language. A GUI in the 90s was likely written in C or C++. Increasingly, it's now written in languages with complex runtime environments like .NET, or it's effectively a browser tab built with web technologies.

Those C/C++ programs almost always had buffer overflows. Buffer overflows were dropped from the OWASP Top 10 back in 2007, meaning the industry no longer considers them a primary threat. That should be considered a huge success. Related issues, like dynamic memory mismanagement, are also almost gone.

There are ways to deal with buffer overflows without a language that lives in a complex managed runtime, as Go and Rust do: the compiler emits machine code that checks array bounds on every access, while being only a smidge slower than C/C++. With SSDs all but removing the excuse that disk I/O is the limiting factor, this is increasingly the way to go.
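Here is roughly what that bounds checking looks like from the programmer's side in Rust. This is a minimal sketch: `checked_read` and `indexing_panics` are illustrative helper names, and catching the panic with `catch_unwind` assumes the default `panic = "unwind"` build setting. Both the `get` method and plain `[]` indexing check the index against the slice length, so an out-of-range read becomes a `None` or a panic instead of a silent read of adjacent memory.

```rust
use std::panic;

// Checked read: `get` compares the index to the length and returns None if it is out of range.
fn checked_read(buffer: &[u8], index: usize) -> Option<u8> {
    buffer.get(index).copied()
}

// Plain indexing is bounds-checked too; an out-of-range index panics.
// Returns true if `buffer[index]` panicked (requires the default unwinding panic strategy).
fn indexing_panics(buffer: &[u8], index: usize) -> bool {
    panic::catch_unwind(|| buffer[index]).is_err()
}

fn main() {
    let buffer = [10u8, 20, 30, 40];
    println!("{:?}", checked_read(&buffer, 2)); // Some(30)
    println!("{:?}", checked_read(&buffer, 7)); // None
    // The default panic hook also prints "index out of bounds" to stderr here.
    println!("buffer[7] panicked: {}", indexing_panics(&buffer, 7)); // true
}
```

The check is a compare-and-branch per access, which is where the "smidge slower" comes from; the optimizer can often remove it when it can prove the index is in range, for example when iterating with `for x in buffer.iter()`.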

The industry had good reasons to use complex runtimes, though some of the reasons are now changing.

Oh, and look at what old games did to optimize things, too. The Minus World glitch in Super Mario Bros., rooted in uninitialized values of a data structure that needed to be a consistent shape, would be unlikely to happen if the game were written in Python, and almost certainly wouldn't happen in Rust. Optimizations tend to create bugs all their own.

While there's overhead to safer runtime environments, I wouldn't put much of the blame there. I feel like "back in the day", when something was inefficient, you noticed it more quickly because it had a much larger impact: windows would stop updating, the mouse would get laggy, music would start stuttering. These days something can take up 99% of the CPU time and the system will still chug along without any of those symptoms showing.

I remember early Twitter had a "famous" performance issue where the sticky header bar would slow systems down, because the site re-scanned the entire page DOM on every scroll event to find and adjust the header, rather than just caching a reference to it. Meanwhile, yesterday I read an article about the evolution of the preferences UI in Apple's OSes that showed off each version by running it in a VM embedded within the page. It wasn't snappy, but it didn't have the "entire system slows down and stops responding" issues you saw a decade or so ago.
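The difference between re-scanning and caching is easy to sketch outside a browser. The following Rust example is a hypothetical model, not Twitter's actual code: `Page` is a flat list of `Node`s standing in for the DOM, and an index into that list stands in for a cached element reference. `on_scroll_rescan` searches the whole page on every scroll event, while `on_scroll_cached` searches once and reuses the stored index afterwards.

```rust
// Hypothetical stand-in for a DOM element: just an id and a vertical position.
struct Node {
    id: String,
    top: i32,
}

// Hypothetical page: a flat list of nodes plus an optional cached header position.
struct Page {
    nodes: Vec<Node>,
    header_index: Option<usize>,
}

impl Page {
    // Builds `filler_nodes` ordinary nodes followed by the header, the worst case for a linear search.
    fn new(filler_nodes: usize) -> Page {
        let mut nodes: Vec<Node> = (0..filler_nodes)
            .map(|i| Node { id: format!("post-{i}"), top: 0 })
            .collect();
        nodes.push(Node { id: String::from("header"), top: 0 });
        Page { nodes, header_index: None }
    }

    // Slow pattern: search the whole page for the header on every scroll event (O(n) each time).
    fn on_scroll_rescan(&mut self, scroll_y: i32) {
        if let Some(header) = self.nodes.iter_mut().find(|n| n.id == "header") {
            header.top = scroll_y;
        }
    }

    // Cached pattern: find the header once, then reuse the stored index (O(1) afterwards).
    fn on_scroll_cached(&mut self, scroll_y: i32) {
        if self.header_index.is_none() {
            self.header_index = self.nodes.iter().position(|n| n.id == "header");
        }
        if let Some(i) = self.header_index {
            self.nodes[i].top = scroll_y;
        }
    }

    fn header_top(&self) -> Option<i32> {
        self.nodes.iter().find(|n| n.id == "header").map(|n| n.top)
    }
}

fn main() {
    let mut page = Page::new(10_000);
    for scroll_y in 0..3 {
        page.on_scroll_rescan(scroll_y); // walks all 10,001 nodes every time
        page.on_scroll_cached(scroll_y); // walks them only on the first call
    }
    println!("header top: {:?}", page.header_top()); // Some(2)
}
```

One caveat of this simplified model: a cached index goes stale if nodes are inserted or removed before the header, so real code has to invalidate the cache when the structure changes. A browser's element reference doesn't have that particular problem, which makes caching it even cheaper.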

Basically, devs aren't being punished (by tooling) for being inefficient, so they don't notice when they are, and newer devs never realise they need to.

One thing I love about the Linux/FOSS world is that people work on software because they care about it. This leads to them focusing on parts of the system that users often also care about, rather than the parts that Product Management calculated could best grow engagement and revenue per user over the next quarter.

I’m not arguing that all these big frameworks and high-level languages are bad, by the way. Making computers and programming accessible is a huge positive. I probably even use some of their inefficient creations that simply would not exist otherwise. And for many small or one-off applications, the programming time saved is orders of magnitude greater than the execution time an optimized version would save.

But when it comes to the most performance-sensitive utilities and kernel code in my GNU plus Linux operating system, efficiency matters far more, and I’ll stick with the stuff that was forged and chiseled from raw C over decades by the greybeards.

"If you have resources, why shouldn't MY website be using 100% of it?" - web developers since 2017

Oh my fuck, my work has a website and I hate it. There are multiple fields to fill out on a page, and every time you fill in one field, the entire page automatically refreshes. I can’t just tab from field to field and fill things out; I have to fill out a field, wait for the refresh, click in the next field, fill it out, wait for the refresh, click in the next field… until I’m done.

Next, for some reason everything is in a floating window and there’s no scrolling outside of it. That means if I click the page wrong, the floating window moves and I can’t move it back. I lose all my progress because the only way to fix it is to refresh the site.

Then there’s the speed. At the end of the day, when everyone is using this site, it gets extremely slow. You’d think this would be a predictable issue that the company could be proactive about, yet every day, right when we’re itching the most to go home, every one of us experiences the dreaded lag. I hadn’t seen lag this bad since I played Sims 2 on an old computer.

That’s because in the Celeron 266-300A-350 days we overclockers were as gods! And if you had just moved from a modem connection to a university LAN connection like me, it was peak computer usage.

The way you describe performance then and now makes me wonder if you’re thinking mostly about running SUSE back then and talking about a Windows (Teams) machine now. I definitely remember things like the right-click menu sometimes taking forever to load on old Windows and HDD-based systems.

Now that I’m using Linux on my work and home PCs, after running Windows on them first, they have that responsive feel back.

I use Arch BTW.

Teams runs on just about anything, which is part of why it's so slow.

Back in the day, Windows 98 was definitely faster than SUSE on my machine. Linux drivers back then were rough, and if you wanted to play a game you definitely needed Windows or DOS.

I only had 56k dialup back then, no fast internet for me.

Gotcha. Something about what you said made it sound like the standard Windows flavor to me.

Maybe it’s because I’ve gotten so used to running Teams in a browser tab that its lagginess just feels like a slow-loading webpage refresh, while the rest of the system’s GUI is flawless.

It's a two-fold curse. First, every single program these days isn't a standalone program; it's a glorified web browser. Hand in hand with that, for these webpages-disguised-as-programs to behave the way you'd expect a modern UI to act, they need five layers of JavaScript frameworks, each adding its own pile of cruft to the slag heap that is modern app design. It's horrendous and I hate it.