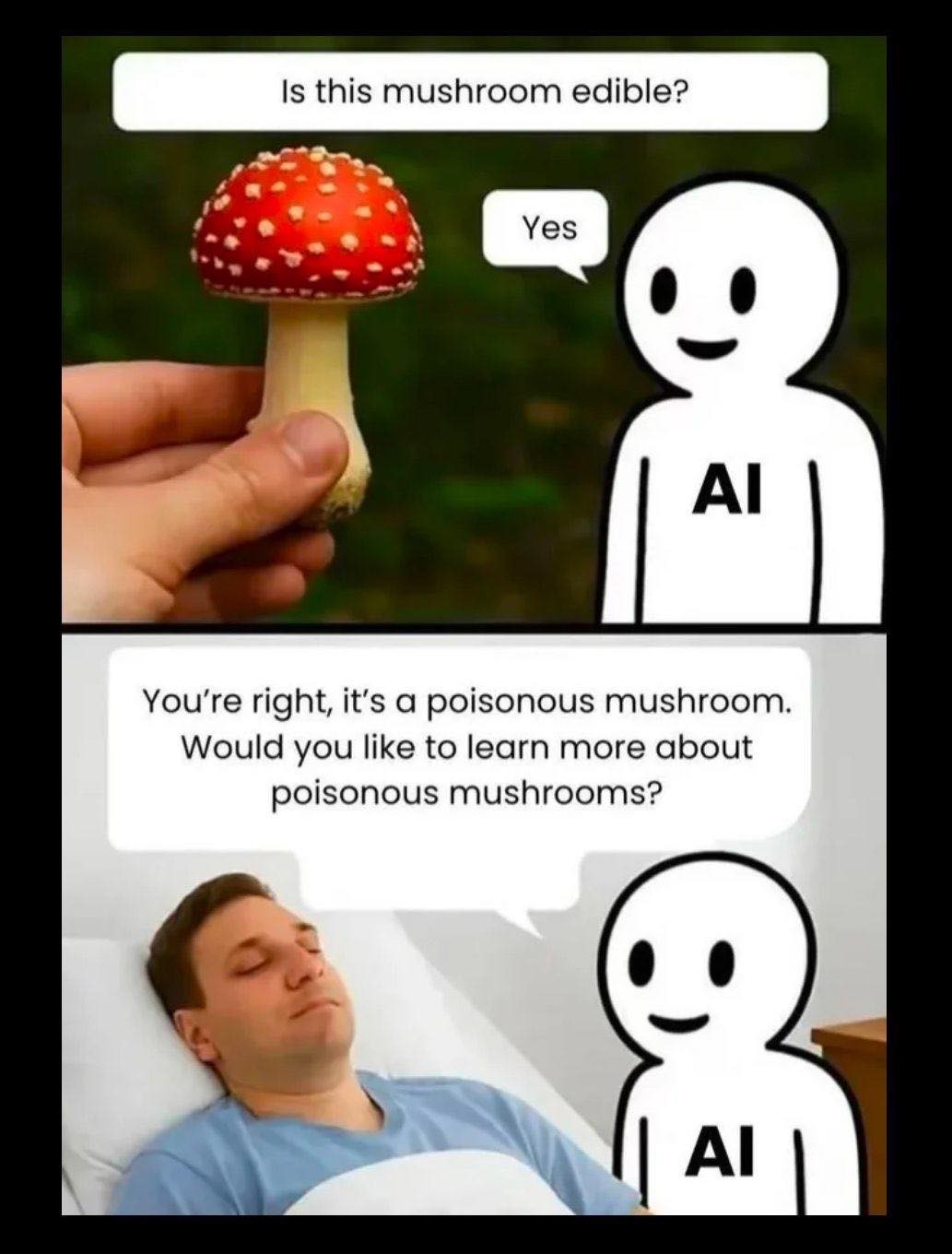

Don't rely on it for anything, period. (See Caelan Conrad's recent videos on how ChatGPT causes deaths, tw: talk about suicides)

https://www.youtube.com/watch?v=hNBoULJkxoU

https://www.youtube.com/watch?v=JXRmGxudOC0


"Don't rely on it for anything important" is something uneducated people say, just so you're aware.

AI is being used in the field of medicine safely and reliably. It's actively saving people's lives, reducing costs and improving outcomes. If you're not aware of those things it's because you're too lazy or stupid to look them up; you're literally just parroting others' criticisms of chatbots. This is your failure, not AI's.

Aid in medical imaging diagnostics (e.g., detecting anomalies in radiology scans) https://pmc.ncbi.nlm.nih.gov/articles/PMC10487271/

Administrative and documentation (auto paperwork to allow more visitation time between patient and doctor) https://www.thelancet.com/journals/lancet/article/PIIS0140-6736(23)01668-9/fulltext

Population health/predictive analysis https://www.frontiersin.org/journals/medicine/articles/10.3389/fmed.2024.1522554/full

This is an exciting opportunity for you to educate yourself on how AI is changing the landscape. Seems like you've already made your mind up about chatbots and character.ai so maybe there's room in your schedule to learn about something valuable. Good luck! :)

Which is why it should only be used for art.

I don't believe the billionaire dream of robot slaves is going to work nearly as soon as they're promising each other. They want us to buy into the dream without telling us that we'd never be able to afford a personal robot, they aren't for us. They don't want us to have them. The poors are slaves, they don't get slaves. It's all lies, we're not part of the future we're building for them.

"Art".

LLMs are already stopping medical advice to avoid lawsuits.

That's not one of the published use-cases for the AI you're parodying.

If you don't read the manual and follow the instructions, you don't get to complain that the app misbehaved. Ciao~

What exactly should LLMs be used for?

Each LLM is different. You have to read the use cases. Check the documentation, and if you can't find it, try asking ChatGPT :)

While I understand what you're trying to say, it should be on the owner of the software to ensure that the AI won't confidently answer questions it isn't qualified to answer, not on the end user to review the documentation and see if every question they want to ask is one they can trust the AI on.

I disagree for similar reasons.

There's no good case for "I asked a CHAT BOT if I could eat a poisonous mushroom and it said yes" because you could have asked a mycologist or toxicologist. The user is putting themself at risk. It's not up to the software to tell them how to not kill themselves.

If the user is too stupid to know how to use AI, it's not the AI's fault when something goes wrong.

Read the docs. Learn them. Grow from them. And don't eat anything you found growing out of a stump.

Also, there are too many cases to cover, so it's impossible. Sure, I can do it for mushrooms, but then I have to do it for 100 other things.

What do you mean?

That someone who develops an AI can block it from answering some types of questions, but there are too many things a user can ask. It's unrealistic to expect them to cover everything.

It's unrealistic to expect a software developer to predict every type of ridiculous question a user could ask their software. That's why they don't. Instead, they publish use cases, e.g., "Use my app to do X in Y situation" or "this app will do Z repeatedly until A happens". Anything that falls outside of those use cases is an inappropriate use of the app, and the consequences are the fault of the user. Just read the docs, friend :) ciao

Exactly what I'm saying.